Running Vorpal in the Cloud¶

Note

You will need to set up a separate cloud account in order to run Vorpal in the Cloud. These instructions are for the Amazon Web Services (AWS) cloud provider, which has good tools for setting up an HPC cluster.

Vorpal can now be run on a cluster in the Amazon Web Services (AWS) cloud. These instructions show how to set up a user account with the correct permissions, create a storage area, download the ParallelCluster tools, and configure and start a cluster.

These instructions focus on the minimum needed to get up and running on AWS. For more detailed information about HPC clusters, please see:

The AWS High Performance Computing page.

The ParallelCluster Documentation page.

The Basic Structure of an HPC AWS Cluster¶

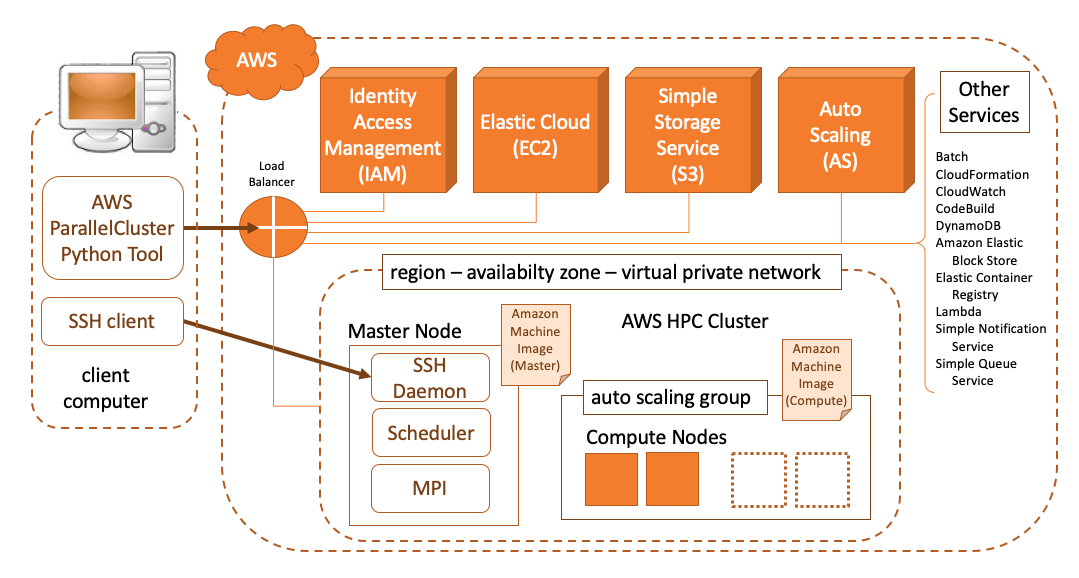

In the cloud, operations are done with web services, so starting a cluster involves interacting with several AWS services. The full architecture diagram is shown in Fig. 99. These instructions refer to various services, and you will need to use the AWS website to set up the cluster with these services.

Fig. 99 The Amazon AWS approach in constructing an HPC Cluster using Web Services and Machine Images that have pre-installed software and networking services that automatically scale the number of compute instances to the job scheduled on the master node.¶

Creating AWS Accounts¶

Before you can run Vorpal on the AWS cloud, at least two accounts have to be set up. The first is the root account, which manages information for the organization (sets up credit card payments, adds other users, etc.). The second account is for the user that runs Vorpal and needs special permissions in order to create a cluster.

Creating a Root Account¶

To create a root account, follow these steps:

Go to the AWS homepage https://aws.amazon.com/

Click the Create a Free Account button.

Enter your account information, and then choose Continue.

Enter your Contact Information (company or personal).

Read and accept the AWS Customer Agreement.

Choose Create Account and Continue.

Enter your credit card information.

Confirm your identity, for example with a text message.

On the next screen, choose a plan (the Basic plan will work).

The final screen will ask for your business role and interests.

Creating a User Account¶

We need to create two policies, two groups associated with these policies, and then a user that is a member of both groups. To do this, follow these steps:

Go to the AWS homepage https://aws.amazon.com/

Choose Sign in to the Console (upper right button)

Enter your root password

At this point, it is good to check your default region, which is shown at the right of the top bar. You will need to use the code for this region to complete the configuration below. A table of Region Names and Region Codes is given at List of Regions Codes.

Now we create two policies:

Type IAM in the search box and then click on the link to the IAM Console

Note the AWS Account # at the top left of the page. You will need this later.

Click on the Policies item in the left navigation bar

Click the Create policy button, which will take you to the Create policy editor page.

In the Create policy page, click the JSON tab and paste in the JSON code below, replacing <ACCOUNT_NUMBER> with the AWS account number noted above (two places) and <REGION> with your region code (one place).

{

"Version": "2012-10-17",

"Statement": [

{

"Action": [

"ec2:DescribeKeyPairs",

"ec2:DescribeRegions",

"ec2:DescribeVpcs",

"ec2:DescribeSubnets",

"ec2:DescribeSecurityGroups",

"ec2:DescribePlacementGroups",

"ec2:DescribeImages",

"ec2:DescribeInstances",

"ec2:DescribeInstanceStatus",

"ec2:DescribeSnapshots",

"ec2:DescribeVolumes",

"ec2:DescribeVpcAttribute",

"ec2:DescribeAddresses",

"ec2:CreateTags",

"ec2:DescribeNetworkInterfaces",

"ec2:DescribeAvailabilityZones"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "EC2Describe"

},

{

"Action": [

"ec2:CreateVolume",

"ec2:RunInstances",

"ec2:AllocateAddress",

"ec2:AssociateAddress",

"ec2:AttachNetworkInterface",

"ec2:AuthorizeSecurityGroupEgress",

"ec2:AuthorizeSecurityGroupIngress",

"ec2:CreateNetworkInterface",

"ec2:CreateSecurityGroup",

"ec2:ModifyVolumeAttribute",

"ec2:ModifyNetworkInterfaceAttribute",

"ec2:DeleteNetworkInterface",

"ec2:DeleteVolume",

"ec2:TerminateInstances",

"ec2:DeleteSecurityGroup",

"ec2:DisassociateAddress",

"ec2:RevokeSecurityGroupIngress",

"ec2:RevokeSecurityGroupEgress",

"ec2:ReleaseAddress",

"ec2:CreatePlacementGroup",

"ec2:DeletePlacementGroup"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "EC2Modify"

},

{

"Action": [

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:DescribeAutoScalingInstances"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "AutoScalingDescribe"

},

{

"Action": [

"autoscaling:CreateAutoScalingGroup",

"ec2:CreateLaunchTemplate",

"ec2:ModifyLaunchTemplate",

"ec2:DeleteLaunchTemplate",

"ec2:DescribeLaunchTemplates",

"ec2:DescribeLaunchTemplateVersions",

"autoscaling:PutNotificationConfiguration",

"autoscaling:UpdateAutoScalingGroup",

"autoscaling:PutScalingPolicy",

"autoscaling:DescribeScalingActivities",

"autoscaling:DeleteAutoScalingGroup",

"autoscaling:DeletePolicy",

"autoscaling:DisableMetricsCollection",

"autoscaling:EnableMetricsCollection"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "AutoScalingModify"

},

{

"Action": [

"dynamodb:DescribeTable"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "DynamoDBDescribe"

},

{

"Action": [

"dynamodb:CreateTable",

"dynamodb:DeleteTable",

"dynamodb:TagResource"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "DynamoDBModify"

},

{

"Action": [

"sqs:GetQueueAttributes"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "SQSDescribe"

},

{

"Action": [

"sqs:CreateQueue",

"sqs:SetQueueAttributes",

"sqs:DeleteQueue",

"sqs:TagQueue"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "SQSModify"

},

{

"Action": [

"sns:ListTopics",

"sns:GetTopicAttributes"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "SNSDescribe"

},

{

"Action": [

"sns:CreateTopic",

"sns:Subscribe",

"sns:DeleteTopic"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "SNSModify"

},

{

"Action": [

"cloudformation:DescribeStackEvents",

"cloudformation:DescribeStackResource",

"cloudformation:DescribeStackResources",

"cloudformation:DescribeStacks",

"cloudformation:ListStacks",

"cloudformation:GetTemplate"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "CloudFormationDescribe"

},

{

"Action": [

"cloudformation:CreateStack",

"cloudformation:DeleteStack",

"cloudformation:UpdateStack"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "CloudFormationModify"

},

{

"Action": [

"s3:Get*",

"s3:List*"

],

"Resource": "arn:aws:s3:::<REGION>-aws-parallelcluster*",

"Effect": "Allow",

"Sid": "S3ParallelClusterReadWrite"

},

{

"Action": [

"iam:PassRole",

"iam:CreateRole",

"iam:DeleteRole",

"iam:GetRole",

"iam:TagRole",

"iam:SimulatePrincipalPolicy"

],

"Resource": "arn:aws:iam::<ACCOUNT_NUMBER>:role/vsim_cluster_role",

"Effect": "Allow",

"Sid": "IAMModify"

},

{

"Action": [

"iam:CreateInstanceProfile",

"iam:DeleteInstanceProfile"

],

"Resource": "arn:aws:iam::<ACCOUNT_NUMBER>:instance-profile/*",

"Effect": "Allow",

"Sid": "IAMCreateInstanceProfile"

},

{

"Action": [

"iam:AddRoleToInstanceProfile",

"iam:RemoveRoleFromInstanceProfile",

"iam:GetRolePolicy",

"iam:GetPolicy",

"iam:AttachRolePolicy",

"iam:DetachRolePolicy",

"iam:PutRolePolicy",

"iam:DeleteRolePolicy"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "IAMInstanceProfile"

},

{

"Action": [

"efs:DescribeMountTargets",

"efs:DescribeMountTargetSecurityGroups",

"ec2:DescribeNetworkInterfaceAttribute"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "EFSDescribe"

},

{

"Action": [

"ssm:GetParametersByPath"

],

"Resource": "*",

"Effect": "Allow",

"Sid": "SSMDescribe"

}

]

}

Click the Review Policy button, which takes you to the review step.

If there are no issues with the JSON, enter the name vsim_user_policy. Otherwise, click the Previous button and fix any issues. Once you are ready to create the policy, click Create policy to complete the first policy.

Back on the Policies page, click the Create policy button to start a second policy.

In the Create policy page, click the JSON tab and paste in the JSON code below, replacing the four occurrences of <ACCOUNT_NUMBER> with the AWS account number noted above and the five occurrences of <REGION> with your region code.

{

"Version": "2012-10-17",

"Statement": [

{

"Action": [

"ec2:DescribeVolumes",

"ec2:AttachVolume",

"ec2:DescribeInstanceAttribute",

"ec2:DescribeInstanceStatus",

"ec2:DescribeInstances",

"ec2:DescribeRegions"

],

"Resource": [

"*"

],

"Effect": "Allow",

"Sid": "EC2"

},

{

"Action": [

"dynamodb:ListTables"

],

"Resource": [

"*"

],

"Effect": "Allow",

"Sid": "DynamoDBList"

},

{

"Action": [

"sqs:SendMessage",

"sqs:ReceiveMessage",

"sqs:ChangeMessageVisibility",

"sqs:DeleteMessage",

"sqs:GetQueueUrl"

],

"Resource": [

"arn:aws:sqs:<REGION>:<ACCOUNT_NUMBER>:parallelcluster-*"

],

"Effect": "Allow",

"Sid": "SQSQueue"

},

{

"Action": [

"autoscaling:DescribeAutoScalingGroups",

"autoscaling:TerminateInstanceInAutoScalingGroup",

"autoscaling:SetDesiredCapacity",

"autoscaling:UpdateAutoScalingGroup",

"autoscaling:DescribeTags",

"autoscaling:SetInstanceHealth"

],

"Resource": [

"*"

],

"Effect": "Allow",

"Sid": "Autoscaling"

},

{

"Action": [

"cloudformation:DescribeStacks",

"cloudformation:DescribeStackResource"

],

"Resource": [

"arn:aws:cloudformation:<REGION>:<ACCOUNT_NUMBER>:stack/parallelcluster-*/*"

],

"Effect": "Allow",

"Sid": "CloudFormation"

},

{

"Action": [

"dynamodb:PutItem",

"dynamodb:Query",

"dynamodb:GetItem",

"dynamodb:DeleteItem",

"dynamodb:DescribeTable"

],

"Resource": [

"arn:aws:dynamodb:<REGION>:<ACCOUNT_NUMBER>:table/parallelcluster-*"

],

"Effect": "Allow",

"Sid": "DynamoDBTable"

},

{

"Action": [

"s3:GetObject"

],

"Resource": [

"arn:aws:s3:::<REGION>-aws-parallelcluster/*"

],

"Effect": "Allow",

"Sid": "S3GetObj"

},

{

"Action": [

"s3:PutObject"

],

"Resource": [

"arn:aws:s3:::<REGION>-aws-parallelcluster/*"

],

"Effect": "Allow",

"Sid": "S3PutObj"

},

{

"Action": [

"sqs:ListQueues"

],

"Resource": [

"*"

],

"Effect": "Allow",

"Sid": "SQSList"

},

{

"Action": [

"iam:PassRole",

"iam:CreateRole"

],

"Resource": [

"arn:aws:iam::<ACCOUNT_NUMBER>:role/parallelcluster-*"

],

"Effect": "Allow",

"Sid": "BatchJobPassRole"

}

]

}

Click the Review Policy button, which takes you to the review step.

If there are no issues with the JSON, enter the name vsim_creator_policy. Otherwise, click the Previous button and fix any issues. Once you are ready to create the policy, click Create policy to complete the second policy.
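The placeholder substitution described above can also be done with a small script before pasting the result into the policy editor. The following is a minimal sketch, not part of the AWS tooling: the function name `fill_policy`, the two-statement excerpt, the account number 123456789012, and the region us-west-2 are all illustrative assumptions. It replaces the placeholders, refuses to continue if any `<...>` placeholder is left (including a misspelled one), and confirms that the result is valid JSON.

```python
import json
import re

# Matches any remaining placeholder such as <REGION> or <ACCOUNT_NUMBER>.
PLACEHOLDER = re.compile(r"<[A-Z_]+>")


def fill_policy(template: str, account_number: str, region: str) -> dict:
    """Substitute the two placeholders and return the parsed policy."""
    filled = (template
              .replace("<ACCOUNT_NUMBER>", account_number)
              .replace("<REGION>", region))
    leftover = PLACEHOLDER.findall(filled)
    if leftover:
        raise ValueError(f"unfilled placeholders: {sorted(set(leftover))}")
    # json.loads raises json.JSONDecodeError if the text is malformed.
    return json.loads(filled)


# Illustrative excerpt of the second policy (two statements only).
EXCERPT = """
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["sqs:SendMessage", "sqs:ReceiveMessage"],
      "Resource": ["arn:aws:sqs:<REGION>:<ACCOUNT_NUMBER>:parallelcluster-*"],
      "Effect": "Allow",
      "Sid": "SQSQueue"
    },
    {
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::<REGION>-aws-parallelcluster/*"],
      "Effect": "Allow",
      "Sid": "S3GetObj"
    }
  ]
}
"""

# Made-up account number and an example region code.
policy = fill_policy(EXCERPT, account_number="123456789012", region="us-west-2")
for statement in policy["Statement"]:
    print(statement["Sid"], statement["Resource"][0])
```

To use it with the full policies, save each JSON document to a file, read it into a string, and pass it to `fill_policy`; `json.dumps(policy, indent=2)` then gives text that can be pasted into the JSON tab.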

Now we create two groups for the two policies:

Click on the Groups item in the left navigation bar

Click Create New Group, enter the name vsim_user_group, and then click the Next Step button.

In the Attach Policy page, enter vsim in the Policy Type search box, then check the checkbox by vsim_user_policy, and click the Next Step button.

In the Review page, click the Create Group button

Click the Create New Group button a second time, but this time name the group vsim_creator_group and attach the vsim_creator_policy policy.

Next, we add a Role for the EC2 service to use to be able to access the AWS services:

Click on the Roles item in the left navigation bar

Click Create role and choose EC2 as the service that will use the role. Click the Next: Permissions button.

Enter vsim in the search box to filter the policies to attach and select both the vsim_creator_policy and the vsim_user_policy, then click Next: Tags.

No tags are necessary, so if you don’t want any, click Next: Review.

Enter the Role name, vsim_cluster_role and click Create role

Create one more role. Click Create role, choose EC2 Auto Scaling as the service, and then choose EC2 Auto Scaling as the use case.

Click Next: Permissions, Next: Tags, Next: Review, and then Create role.

We then add the user:

Click the Users item in the left navigation bar

Click the Add User button

Enter a user name and select the Programmatic access option and then click the Next:Permissions button.

In the Add user to group section, check both the vsim_user_group and the vsim_creator_group checkboxes. Then click the Next: Tags button.

No tags are required, so you can move on to click the Next: Review button and if all is correct, then click the Create user button.

The next page should show a green box with the word Success in it. Keep this page open, because it is the only time you will have access to the secret key for the user. Save the key in a file on your local computer now. To do this, create the file ~/.aws/config with the following commands:

$ mkdir -p ~/.aws

$ touch ~/.aws/config

$ chmod 600 ~/.aws/config

Then edit this “config” file. Copy the following contents into it, replacing <ACCESS_KEY_ID> and <SECRET_ACCESS_KEY> with the values shown in the browser and <REGION> with the code of the region you are using.

[default]

aws_access_key_id=<ACCESS_KEY_ID>

aws_secret_access_key=<SECRET_ACCESS_KEY>

region=<REGION>

This file will be used later to contact the various Amazon Web Services needed to create a cluster.

You can also download a comma-separated-values (.csv) file with the user’s credentials to save the access key information.
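The same config file can be written from a script instead of by hand. This is a minimal sketch using only the Python standard library; the function name `write_aws_config` and the key values are placeholders invented for illustration, and the demonstration writes into a temporary directory rather than your real ~/.aws directory.

```python
import configparser
import os
import stat
import tempfile
from pathlib import Path


def write_aws_config(path: Path, access_key_id: str,
                     secret_access_key: str, region: str) -> None:
    """Write a [default] profile to `path`, readable only by the owner."""
    # interpolation=None keeps any '%' in a key from being interpreted.
    parser = configparser.ConfigParser(interpolation=None)
    parser["default"] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
        "region": region,
    }
    path.parent.mkdir(parents=True, exist_ok=True)
    # Create the file with mode 0600 from the start (same intent as chmod 600).
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        parser.write(handle, space_around_delimiters=False)
    # Also tighten the mode of a file that already existed.
    os.chmod(path, 0o600)


# Demonstration in a scratch directory with made-up credentials.
with tempfile.TemporaryDirectory() as scratch:
    demo_path = Path(scratch) / ".aws" / "config"
    write_aws_config(demo_path, "EXAMPLE_KEY_ID", "EXAMPLE_SECRET", "us-west-2")
    demo_text = demo_path.read_text()
    demo_mode = stat.S_IMODE(demo_path.stat().st_mode)
    print(demo_text)
    print(oct(demo_mode))
```

For real use, call `write_aws_config(Path.home() / ".aws" / "config", ...)` with the values from the Success page.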

Create a Long Term Storage Space¶

These instructions take you through setting up an S3 storage bucket and uploading VSim to it.

Go to the S3 dashboard, by choosing Services->Storage->S3

Click the Create Bucket button

Enter a unique name for the bucket and note it for later as <BUCKET_NAME>. Ensure that the region is the same as <REGION>, then click the Create button in the lower left (not the Next button in the lower right).

In the list of buckets, click on the link to your bucket.

Click the Upload button and then the Add files button. Navigate to where your VSim Linux installer (VSim-11.0.0-Linux64.tar.gz) is located. Then click the Add more files link and this time navigate to the license file you have been given.

Click Next. Ensure that your AWS user account is the owner and has full permissions to Read and Write. Click Next.

Choose Standard for the Storage Class unless you have a reason to choose otherwise. Click Next.

Review the selections and click Upload if ready.

Create a Short Term (mountable) Storage System¶

Short term storage is used only while the cluster is running. Files are synced back and forth with the long term storage when the cluster is created and deleted. There are two basic options for this storage: (1) EFS, an NFS-type system; and (2) FSx, a high performance Lustre system. Option 2 is more expensive but faster. You can create both and switch between clusters that use one or the other. We give instructions for EFS first.

Go to the EFS dashboard by choosing Services->Storage->EFS

Ensure that the region shown in the top bar is your region.

Click the Create file system button

The next screen is a review of the default settings. These will be fine unless you know that you want something different. So, click the Next Step button.

In the Configure optional settings page, scroll down to the Choose performance mode section and select the Max I/O option, which is needed for clusters with many compute nodes. Click the Next Step button.

In the Review and create page, you will see the Virtual Private Cloud (VPC) that contains the file system, along with a set of Availability Zones (subnets) within your region. Take note of these, because the VPC and subnet IDs will be used later in configuring your cluster.

When you are done reviewing, click Create File System.

Create key pair to use with SSH¶

Go to the EC2 dashboard, by choosing Services->Compute->EC2

Click on the Key Pairs link in top-middle of the page.

At this point we need to upload a public key. Usually, this is in the ~/.ssh directory. On Mac, the web browser cannot navigate to that directory, so copy the public key to a directory that is available, like Documents.

$ cp ~/.ssh/id_rsa.pub ~/Documents

Click the Import Key Pair button.

Next to the Load public key from file option, click the Choose File button and then find the public key in the Documents directory.

Change the name to vsimcluster.

Click the Import button.

Downloading the ParallelCluster tools¶

These instructions describe how to download the Python 3 based tool from AWS that is needed to launch a cluster from the command line:

Download and install Python 3 if you don’t already have it. On Mac, this can be done with homebrew:

$ python --version

$ brew install python3 # if not version 3.7

Ensure that python3 is first in your PATH then install the cluster tools. For example, on Mac you would do the following:

$ which pip3 # should return a full path to python3 distribution

$ pip3 install aws-parallelcluster --upgrade --user

$ export PATH=~/Library/Python/3.7/bin:$PATH

$ which pcluster # should return a full path

On Linux, Python puts pip-installed packages in ~/.local/bin, which is likely already in your path, so there is no need for the export line.
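The `which` checks above can also be run from Python, which behaves the same way on Mac and Linux. This is a small sketch using `shutil.which` from the standard library; the helper name `report` is made up for illustration.

```python
import shutil


def report(tools):
    """Map each tool name to its full path, or None if it is not on PATH."""
    return {tool: shutil.which(tool) for tool in tools}


for tool, path in report(["python3", "pip3", "pcluster"]).items():
    print(f"{tool}: {path if path else 'not found on PATH'}")
```

If pcluster is reported as not found, adjust PATH as described above and run the check again in a new shell.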

After pcluster is installed, create the configuration directory and file (~/.parallelcluster/config) with the following contents, replacing <REGION> and <BUCKET_NAME> with the values noted earlier and <VPC_ID> and <SUBNET_ID> with your VPC and subnet IDs. The VPC ID can be found on the EC2 dashboard by clicking on the Default VPC link in the upper right of the page. Then, in the VPC Dashboard, click on the Subnets link in the left navigation bar. You should see a subnet ID for each Availability Zone. Choosing the first one is fine.

[aws]

aws_region_name = <REGION>

[cluster default]

key_name = vsimcluster

initial_queue_size = 0

max_queue_size = 16

min_queue_size = 0

placement_group = DYNAMIC

cluster_type = spot

base_os = centos7

scheduler = slurm

master_instance_type = c4.4xlarge

compute_instance_type = c4.4xlarge

vpc_settings = vsimvpc

ebs_settings = vsimebs

s3_read_write_resource = arn:aws:s3:::<BUCKET_NAME>*

[vpc vsimvpc]

vpc_id = vpc-<VPC_ID>

master_subnet_id = subnet-<SUBNET_ID>

[ebs vsimebs]

shared_dir = /vsim

volume_type = gp2

volume_size = 100

[global]

cluster_template = default

update_check = true

[aliases]

ssh = ssh {CFN_USER}@{MASTER_IP} {ARGS}
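Before launching, it can help to check that the section names in this configuration refer to each other correctly and that no placeholder was left unfilled. The sketch below is an informal sanity check written for this page, not the validation that pcluster itself performs; the function name `check_config` and the shortened sample text are assumptions for illustration.

```python
import configparser

# Shortened sample of the configuration, with two placeholders left unfilled.
SAMPLE = """\
[aws]
aws_region_name = us-west-2

[cluster default]
key_name = vsimcluster
vpc_settings = vsimvpc
ebs_settings = vsimebs

[vpc vsimvpc]
vpc_id = vpc-<VPC_ID>
master_subnet_id = subnet-<SUBNET_ID>

[ebs vsimebs]
shared_dir = /vsim

[global]
cluster_template = default
"""


def check_config(text: str) -> list:
    """Return a list of human-readable problems found in the config text."""
    # interpolation=None so values such as {CFN_USER} or '%' are left alone.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    template = parser.get("global", "cluster_template", fallback="default")
    cluster = f"cluster {template}"
    if not parser.has_section(cluster):
        return [f"missing section [{cluster}]"]
    problems = []
    # vpc_settings = NAME must point at [vpc NAME]; likewise for ebs_settings.
    for key, prefix in (("vpc_settings", "vpc"), ("ebs_settings", "ebs")):
        name = parser.get(cluster, key, fallback=None)
        if name is not None and not parser.has_section(f"{prefix} {name}"):
            problems.append(f"{key} = {name} but there is no [{prefix} {name}] section")
    # Flag any value that still contains a <PLACEHOLDER>.
    for section in parser.sections():
        for key, value in parser.items(section):
            if "<" in value and ">" in value:
                problems.append(f"[{section}] {key} still contains a placeholder: {value}")
    return problems


for problem in check_config(SAMPLE):
    print(problem)
```

To check the real file, read ~/.parallelcluster/config into a string and pass it to `check_config`; an empty list means neither kind of problem was found.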

Creating a Cluster¶

At this point, you should be set up to start an AWS Cloud Cluster with the ParallelCluster tool.

$ export PATH=~/Library/Python/3.7/bin:$PATH

$ pcluster create mycluster

This will take several minutes. You can watch for the Master node being created under the Running Instances link on the EC2 Dashboard. Also, you may receive a message on your first startup saying that your “request for accessing resources in this region is being validated”.

When the prompt returns, the login information will be printed out. Use that login information (account and public IP) to log in, with a command like:

$ ssh <ACCOUNT>@<PUBLIC_IP>

You should not need a password because you will be using the private/public key pair that you imported above. Once you are logged in to the cluster, you can look around with commands like the following:

$ ls /

$ which sbatch

$ sinfo

You should see the /vsim file system and that the scheduler is present and working. You can try a simple slurm batch script with the following contents:

#!/bin/sh

#SBATCH --job-name=test

#SBATCH --output=test.out

#SBATCH --error=test.err

#SBATCH --nodes=2

#SBATCH --ntasks=4

#SBATCH --time=00:01:00

/bin/hostname

srun -l /bin/hostname

srun -l which mpirun

Save the script as test.slm and submit it with:

$ sbatch test.slm

You can view its progress with the squeue command:

$ squeue

You can check that compute nodes are started by going back to the EC2 Running Instances page in the browser. When the job is completed, you should see the standard output (test.out) and standard error (test.err) files. The error file should be empty, and the output file should contain hostnames and paths to MPI.

Running A Job on the Cluster¶

If the cluster is working well, and you are confident of how to run a Slurm batch job, then you can set up VSim on the cluster. We do this by syncing the S3 storage to the shared /vsim directory.

$ aws s3 sync s3://<BUCKET_NAME> /vsim

$ cd /vsim

$ tar xzvf VSim-11.0.0-Linux64.tar.gz

$ cp vsim11.txlic VSim-11.0/licenses/license.txt

And then to test running VSim, copy an example directory and translate the SDF file with:

$ cd /vsim

$ mkdir empulse/

$ cp VSim-11.0/Contents/examples/VSimBase/VisualSetup/emPulseInVacuum/* empulse/

$ source VSim-11.0/VSimComposer.sh

$ cd empulse

$ sdf2vpre -s emPulseInVacuum.sdf -p emPulseInVacuum.pre

Here is a Slurm batch script to run a job on the cluster with this input file:

#!/bin/bash

#SBATCH --job-name=empulse

#SBATCH --output=empulse.out

#SBATCH --error=empulse.err

#SBATCH --nodes=2

#SBATCH --ntasks-per-node=2

#SBATCH --ntasks=4

#SBATCH --time=00:10:00

source /vsim/VSim-11.0/VSimComposer.sh

srun --hint=nomultithread --ntasks-per-node=16 vorpal -i emPulseInVacuum.pre -n 100 -d 50

If the file containing the above is named empulse.slm, then the job is submitted with:

$ sbatch empulse.slm

You can check on your job with:

$ squeue

and you can stop the job with:

$ scancel JOBID

where JOBID is the job id returned by squeue.
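Batch scripts like the ones in this section can be generated from a few parameters, which keeps the node and task counts consistent with each other. The following is a minimal sketch; the function name `render_sbatch` is hypothetical, and it simply computes the total task count as nodes times tasks per node before writing the `#SBATCH` lines used above.

```python
def render_sbatch(job_name: str, nodes: int, ntasks_per_node: int,
                  time_limit: str, commands: list) -> str:
    """Return the text of a Slurm batch script for the given job layout."""
    if nodes < 1 or ntasks_per_node < 1:
        raise ValueError("nodes and ntasks_per_node must be at least 1")
    # Total tasks follow from the layout, so the three values cannot disagree.
    ntasks = nodes * ntasks_per_node
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --output={job_name}.out",
        f"#SBATCH --error={job_name}.err",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={ntasks_per_node}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={time_limit}",
        "",
    ]
    lines.extend(commands)
    return "\n".join(lines) + "\n"


script = render_sbatch(
    job_name="empulse",
    nodes=2,
    ntasks_per_node=2,
    time_limit="00:10:00",
    commands=[
        "source /vsim/VSim-11.0/VSimComposer.sh",
        "srun --hint=nomultithread --ntasks-per-node=16 "
        "vorpal -i emPulseInVacuum.pre -n 100 -d 50",
    ],
)
print(script)
```

Writing the returned text to a file such as empulse.slm gives a script that can be submitted with sbatch as shown above.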

Shutting Down the Cluster¶

When you have finished running your simulations on the cluster, shut it down with the following commands:

$ export PATH=~/Library/Python/3.7/bin:$PATH

$ pcluster delete mycluster